Transformers

Context

- It is important to take context into account. Otherwise, a word that has several meanings may be interpreted incorrectly.

- Example: the word "bank" can refer to a financial institution (a building) or to the land along the edge of a river.

- Without context, translation and other language tasks become difficult.

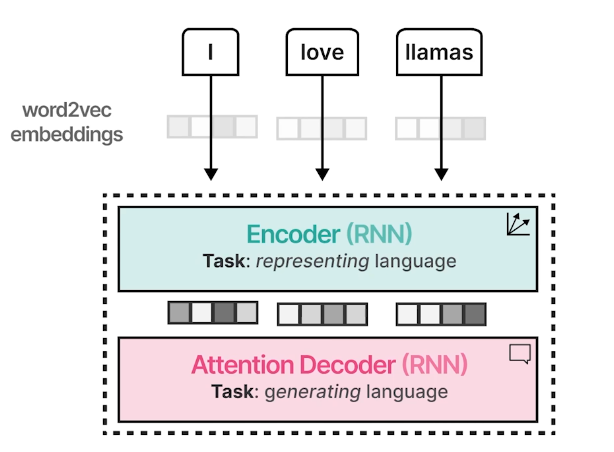
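A minimal sketch of the problem, assuming tiny hand-made 2-d vectors (the vocabulary and numbers are illustrative, not real Word2Vec output). A static lookup returns the same vector for "bank" in both sentences, while a crude context-aware stand-in, which averages the word with the whole sentence, gives two different vectors. `static_embedding` and `contextual_embedding` are hypothetical helpers, not a real library API.

```python
# Hypothetical toy vectors (not real Word2Vec output):
# dimension 0 loosely means "finance", dimension 1 loosely means "river".
STATIC = {
    "the": [0.1, 0.1],
    "of": [0.1, 0.1],
    "bank": [0.5, 0.5],
    "river": [0.0, 1.0],
    "lent": [0.6, 0.0],
    "money": [1.0, 0.0],
}


def static_embedding(sentence, word):
    # A static (Word2Vec-style) lookup ignores the sentence entirely.
    return STATIC[word]


def contextual_embedding(sentence, word):
    # Crude stand-in for a contextual model: average the word's own vector
    # with the mean vector of every token in the sentence.
    tokens = sentence.split()
    dims = len(STATIC[word])
    sentence_mean = [sum(STATIC[t][d] for t in tokens) / len(tokens) for d in range(dims)]
    return [(STATIC[word][d] + sentence_mean[d]) / 2 for d in range(dims)]


S1 = "the bank of the river"
S2 = "the bank lent money"

print(static_embedding(S1, "bank"), static_embedding(S2, "bank"))          # identical
print(contextual_embedding(S1, "bank"), contextual_embedding(S2, "bank"))  # different
```

In the river sentence the contextual vector for "bank" leans toward the "river" dimension, and in the lending sentence it leans toward the "finance" dimension; the static vector cannot make that distinction.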

Encoder / Decoder

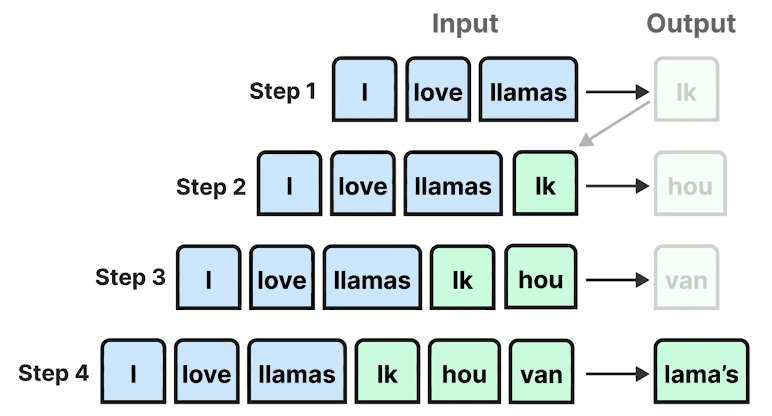

- Encoding: representing an input sentence as embeddings

- Decoding: generating an output sentence from the encoded representation
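The encode/decode split can be sketched as below. This is a toy under stated assumptions: `EMBED` and `OUT_WORD` are hypothetical hand-made tables, and `toy_step` is a hypothetical stand-in for a trained decoder network (it translates word by word, which real models do not do). What the sketch does show is the data flow: the encoder turns every input token into an embedding, and the decoder generates output tokens one at a time, each step seeing the encoded input and the tokens produced so far, until it emits an end token.

```python
# Hypothetical embedding table for a tiny German input vocabulary.
EMBED = {"ich": [1.0, 0.0], "bin": [0.0, 1.0], "hier": [1.0, 1.0]}

# Hypothetical "learned" mapping used by the toy decoder step below.
OUT_WORD = {(1.0, 0.0): "I", (0.0, 1.0): "am", (1.0, 1.0): "here"}

END = "<eos>"


def encode(sentence):
    # Encoding: represent each input token as an embedding vector.
    return [EMBED[tok] for tok in sentence.split()]


def toy_step(encoded, generated):
    # Stand-in for a trained decoder: picks the next output token from the
    # encoder output and the tokens generated so far (word by word only).
    i = len(generated)
    if i >= len(encoded):
        return END
    return OUT_WORD[tuple(encoded[i])]


def decode(encoded, step_fn, max_len=10):
    # Decoding: generate the output one token at a time (autoregressively),
    # stopping at the end token or after max_len tokens.
    generated = []
    while len(generated) < max_len:
        token = step_fn(encoded, generated)
        if token == END:
            break
        generated.append(token)
    return generated


print(decode(encode("ich bin hier"), toy_step))  # ['I', 'am', 'here']
```

Passing the step function in as a parameter keeps the generation loop separate from whatever model produces each token, so a real network could replace `toy_step` without changing `decode`.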

Word2Vec

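- Word2Vec gives each word a single, static embedding. When these embeddings are fed to an encoder/decoder model (typically an RNN), the encoder compresses the whole input into one context embedding. Because a single vector has to represent the entire input, this works poorly for longer sentences and documents.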

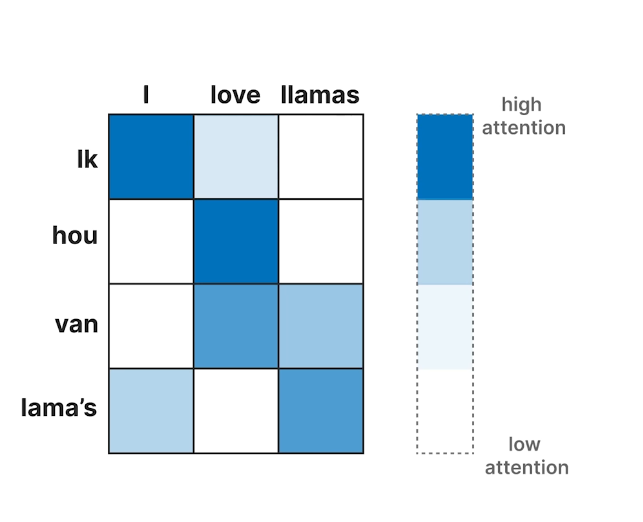
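To see why a single context embedding becomes a bottleneck, here is a minimal RNN-style encoder, assuming random weights in place of trained ones and random vectors in place of real Word2Vec embeddings. It folds any number of input embeddings into one hidden vector of fixed size (`HIDDEN`), so a 3-token input and a 300-token input end up squeezed into the same 4 numbers. All names here are hypothetical.

```python
import math
import random

EMB_DIM = 3   # size of each (hypothetical) Word2Vec-style input vector
HIDDEN = 4    # size of the single context embedding

rng = random.Random(0)
# Random weights stand in for trained parameters (assumption for illustration).
W = [[rng.uniform(-0.5, 0.5) for _ in range(HIDDEN)] for _ in range(HIDDEN)]
U = [[rng.uniform(-0.5, 0.5) for _ in range(EMB_DIM)] for _ in range(HIDDEN)]


def matvec(m, v):
    # Matrix-vector product on plain Python lists.
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def rnn_context_embedding(inputs):
    # h_t = tanh(W h_{t-1} + U x_t); only the final h is handed to the decoder.
    h = [0.0] * HIDDEN
    for x in inputs:
        h = [math.tanh(a + b) for a, b in zip(matvec(W, h), matvec(U, x))]
    return h


def random_sentence(n_tokens):
    # Random vectors in place of real word embeddings.
    return [[rng.uniform(-1, 1) for _ in range(EMB_DIM)] for _ in range(n_tokens)]


short_ctx = rnn_context_embedding(random_sentence(3))
long_ctx = rnn_context_embedding(random_sentence(300))
print(len(short_ctx), len(long_ctx))  # 4 4: same size however long the input is
```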

Attention

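- Attention identifies the relationships between the words of the input in order to capture context.

- Instead of passing only a single context embedding to the decoder, the encoder passes a representation for every input token, so the decoder can focus on the tokens most relevant to each output step.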
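A minimal sketch of the idea, using scaled dot-product scoring (the form used in Transformers; other scoring functions exist, such as additive attention). The decoder's current state acts as the query, the per-token encoder states act as keys and values, and a softmax over the scores gives weights that sum to 1. The names `attend`, `encoder_states`, and `decoder_state`, and the toy vectors, are assumptions for illustration.

```python
import math


def softmax(scores):
    # Subtract the max for numerical stability; the weights sum to 1.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    # Score each input token against the query (scaled dot product),
    # turn the scores into weights, and return the weighted sum of values.
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    context = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
    return weights, context


# One (hypothetical) encoder state per input token, used as both keys and values.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
decoder_state = [1.0, 0.0]  # the query for the current output step

weights, context = attend(decoder_state, encoder_states, encoder_states)
print(weights)
print(context)
```

Because the query is most similar to the first and third token states, those two receive equal weights that are larger than the second one, so the context vector leans toward them. A new context vector is computed for every output step instead of reusing one fixed embedding.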